Welcome to Tigramite’s documentation!¶

Indices and tables¶

TIGRAMITE¶

Tigramite is a causal time series analysis python package. It allows to efficiently estimate causal graphs from high-dimensional time series datasets (causal discovery) and to use these graphs for robust forecasting and the estimation and prediction of direct, total, and mediated effects. Causal discovery is based on linear as well as non-parametric conditional independence tests applicable to discrete or continuously-valued time series. Also includes functions for high-quality plots of the results. Please cite the following papers depending on which method you use:

Overview: Runge, J., Gerhardus, A., Varando, G. et al. Causal inference for time series. Nat Rev Earth Environ (2023). https://doi.org/10.1038/s43017-023-00431-y

PCMCI: J. Runge, P. Nowack, M. Kretschmer, S. Flaxman, D. Sejdinovic, Detecting and quantifying causal associations in large nonlinear time series datasets. Sci. Adv. 5, eaau4996 (2019). https://advances.sciencemag.org/content/5/11/eaau4996

PCMCI+: J. Runge (2020): Discovering contemporaneous and lagged causal relations in autocorrelated nonlinear time series datasets. Proceedings of the 36th Conference on Uncertainty in Artificial Intelligence, UAI 2020,Toronto, Canada, 2019, AUAI Press, 2020. http://auai.org/uai2020/proceedings/579_main_paper.pdf

LPCMCI: Gerhardus, A. & Runge, J. High-recall causal discovery for autocorrelated time series with latent confounders Advances in Neural Information Processing Systems, 2020, 33. https://proceedings.neurips.cc/paper/2020/hash/94e70705efae423efda1088614128d0b-Abstract.html

RPCMCI: Elena Saggioro, Jana de Wiljes, Marlene Kretschmer, Jakob Runge; Reconstructing regime-dependent causal relationships from observational time series. Chaos 1 November 2020; 30 (11): 113115. https://doi.org/10.1063/5.0020538

JPCMCIplus: W. Günther, U. Ninad, J. Runge, Causal discovery for time series from multiple datasets with latent contexts. UAI 2023

Generally: J. Runge (2018): Causal Network Reconstruction from Time Series: From Theoretical Assumptions to Practical Estimation. Chaos: An Interdisciplinary Journal of Nonlinear Science 28 (7): 075310. https://aip.scitation.org/doi/10.1063/1.5025050

Nature Communications Perspective paper: https://www.nature.com/articles/s41467-019-10105-3

Causal effects: J. Runge, Necessary and sufficient graphical conditions for optimal adjustment sets in causal graphical models with hidden variables, Advances in Neural Information Processing Systems, 2021, 34

Mediation class: J. Runge et al. (2015): Identifying causal gateways and mediators in complex spatio-temporal systems. Nature Communications, 6, 8502. http://doi.org/10.1038/ncomms9502

Mediation class: J. Runge (2015): Quantifying information transfer and mediation along causal pathways in complex systems. Phys. Rev. E, 92(6), 62829. http://doi.org/10.1103/PhysRevE.92.062829

CMIknn: J. Runge (2018): Conditional Independence Testing Based on a Nearest-Neighbor Estimator of Conditional Mutual Information. In Proceedings of the 21st International Conference on Artificial Intelligence and Statistics. http://proceedings.mlr.press/v84/runge18a.html

|

PCMCI causal discovery for time series datasets. |

|

LPCMCI is an algorithm for causal discovery in large-scale times series that allows for latent confounders and learns lag-specific causal relationships. |

|

RPCMCI class for extracting causal regimes and the associated graphs from time series data. |

J-PCMCIplus causal discovery for time series datasets from multiple contexts. |

|

|

Base class of conditional independence tests. |

Partial correlation test. |

|

|

Robust partial correlation test based on non-paranormal models. |

GPDC conditional independence test based on Gaussian processes and distance correlation. |

|

GPDC conditional independence test based on Gaussian processes and distance correlation. |

|

Conditional mutual information test based on nearest-neighbor estimator. |

|

Conditional mutual information test for discrete/categorical data. |

|

|

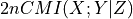

Oracle of conditional independence test X _|_ Y | Z given a graph. |

|

Partial correlation test for multivariate X and Y. |

G-squared conditional independence test for categorical data. |

|

Weighted partial correlation test. |

|

Flexible parametric conditional independence tests for continuous, categorical, or mixed data. |

|

Causal effect estimation. |

|

|

Base class for time series models. |

|

Linear mediation analysis for time series models. |

|

Prediction class for time series models. |

Tigramite data processing functions. |

|

Tigramite toymodels. |

|

Tigramite plotting package. |

tigramite.pcmci: PCMCI¶

- class tigramite.pcmci.PCMCI(dataframe, cond_ind_test, verbosity=0)[source]¶

PCMCI causal discovery for time series datasets.

PCMCI is a causal discovery framework for large-scale time series datasets. This class contains several methods. The standard PCMCI method addresses time-lagged causal discovery and is described in Ref [1] where also further sub-variants are discussed. Lagged as well as contemporaneous causal discovery is addressed with PCMCIplus and described in [5]. See the tutorials for guidance in applying these methods.

PCMCI has:

different conditional independence tests adapted to linear or nonlinear dependencies, and continuously-valued or discrete data ( implemented in

tigramite.independence_tests)(mostly) hyperparameter optimization

easy parallelization (separate script)

handling of masked time series data

false discovery control and confidence interval estimation

Notes

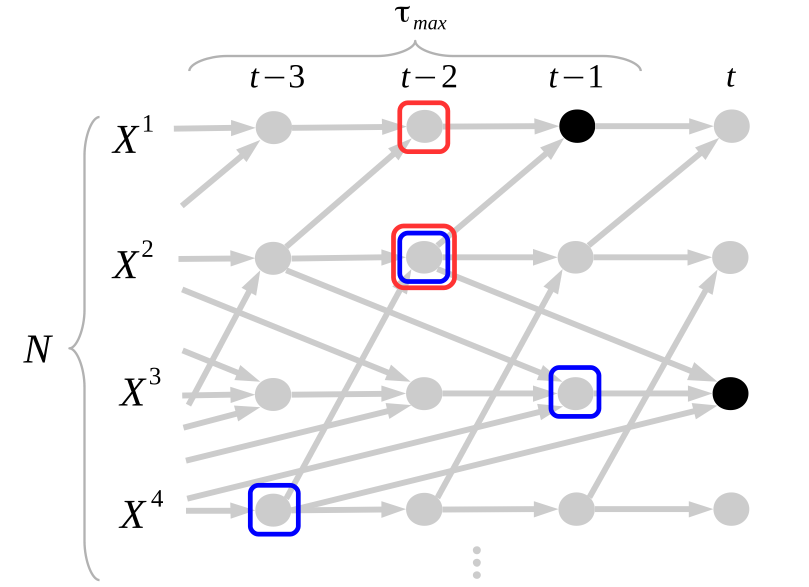

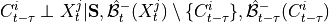

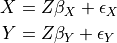

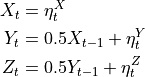

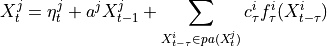

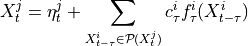

In the PCMCI framework, the dependency structure of a set of time series variables is represented in a time series graph as shown in the Figure. The nodes of a time series graph are defined as the variables at different times and a link indicates a conditional dependency that can be interpreted as a causal dependency under certain assumptions (see paper). Assuming stationarity, the links are repeated in time. The parents

of a variable are defined as the set of all nodes

with a link towards it (blue and red boxes in Figure).

of a variable are defined as the set of all nodes

with a link towards it (blue and red boxes in Figure).The different PCMCI methods estimate causal links by iterative conditional independence testing. PCMCI can be flexibly combined with any kind of conditional independence test statistic adapted to the kind of data (continuous or discrete) and its assumed dependency types. These are available in

tigramite.independence_tests.NOTE: MCI test statistic values define a particular measure of causal strength depending on the test statistic used. For example, ParCorr() results in normalized values between -1 and 1. However, if you are interested in quantifying causal effects, i.e., the effect of hypothetical interventions, you may better look at the causal effect estimation functionality of Tigramite.

References

- [1] J. Runge, P. Nowack, M. Kretschmer, S. Flaxman, D. Sejdinovic,

Detecting and quantifying causal associations in large nonlinear time series datasets. Sci. Adv. 5, eaau4996 (2019) https://advances.sciencemag.org/content/5/11/eaau4996

- [5] J. Runge,

Discovering contemporaneous and lagged causal relations in autocorrelated nonlinear time series datasets http://www.auai.org/~w-auai/uai2020/proceedings/579_main_paper.pdf

- Parameters:

dataframe (data object) – This is the Tigramite dataframe object. Among others, it has the attributes dataframe.values yielding a numpy array of shape ( observations T, variables N) and optionally a mask of the same shape.

cond_ind_test (conditional independence test object) – This can be ParCorr or other classes from

tigramite.independence_testsor an external test passed as a callable. This test can be based on the class tigramite.independence_tests.CondIndTest.verbosity (int, optional (default: 0)) – Verbose levels 0, 1, …

- all_parents¶

Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing the conditioning-parents estimated with PC algorithm.

- Type:

dictionary

- val_min¶

Dictionary of form val_min[j][(i, -tau)] = float containing the minimum test statistic value for each link estimated in the PC algorithm.

- Type:

dictionary

- pval_max¶

Dictionary of form pval_max[j][(i, -tau)] = float containing the maximum p-value for each link estimated in the PC algorithm.

- Type:

dictionary

- iterations¶

Dictionary containing further information on algorithm steps.

- Type:

dictionary

- N¶

Number of variables.

- Type:

int

- T¶

Time series sample length of dataset(s).

- Type:

dict

- get_graph_from_pmatrix(p_matrix, alpha_level, tau_min, tau_max, link_assumptions=None)[source]¶

Construct graph from thresholding the p_matrix at an alpha-level.

Allows to take into account link_assumptions.

- Parameters:

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values, optionally adjusted if fdr_method is not ‘none’.

alpha_level (float, optional (default: 0.05)) – Significance level at which the p_matrix is thresholded to get graph.

tau_mix (int) – Minimum time delay to test.

tau_max (int) – Maximum time delay to test.

link_assumptions (dict or None) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

- Returns:

graph – Causal graph, see description above for interpretation.

- Return type:

array of shape [N, N, tau_max+1]

- get_lagged_dependencies(selected_links=None, link_assumptions=None, tau_min=0, tau_max=1, val_only=False, alpha_level=0.05, fdr_method='none')[source]¶

Unconditional lagged independence tests.

Implements the unconditional lagged independence test (see [ 1]_).

Returns the matrices of test statistic values, (optionally corrected) p-values, and (optionally) confidence intervals. Also (new in 4.3) returns graph based on alpha_level (and optional FDR-correction).

- Parameters:

selected_links (dict or None) – Deprecated, replaced by link_assumptions

link_assumptions (dict) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

tau_min (int, default: 0) – Minimum time lag to test. Note that zero-lags are undirected.

tau_max (int, default: 1) – Maximum time lag. Must be larger or equal to tau_min.

val_only (bool, default: False) – Option to only compute dependencies and not p-values.

alpha_level (float, optional (default: 0.05)) – Significance level at which the p_matrix is thresholded to get graph.

fdr_method (str, optional (default: 'none')) – Correction method, currently implemented is Benjamini-Hochberg False Discovery Rate method (‘fdr_bh’).

- Returns:

graph (array of shape [N, N, tau_max+1]) – Causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values, optionally adjusted if fdr_method is not ‘none’.

conf_matrix (array of shape [N, N, tau_max+1,2]) – Estimated matrix of confidence intervals of test statistic values. Only computed if set in cond_ind_test, where also the percentiles are set.

- print_results(return_dict, alpha_level=0.05)[source]¶

Prints significant parents from output of MCI or PCMCI algorithms.

- Parameters:

return_dict (dict) –

- Dictionary of return values, containing keys

’p_matrix’

’val_matrix’

’conf_matrix’

alpha_level (float, optional (default: 0.05)) – Significance level.

- print_significant_links(p_matrix, val_matrix, conf_matrix=None, graph=None, ambiguous_triples=None, alpha_level=0.05)[source]¶

Prints significant links.

Used for output of PCMCI and PCMCIplus. For the latter also information on ambiguous links and conflicts is returned.

- Parameters:

alpha_level (float, optional (default: 0.05)) – Significance level.

p_matrix (array-like) – Must be of shape (N, N, tau_max + 1).

val_matrix (array-like) – Must be of shape (N, N, tau_max + 1).

conf_matrix (array-like, optional (default: None)) – Matrix of confidence intervals of shape (N, N, tau_max+1, 2).

graph (array-like) – Must be of shape (N, N, tau_max + 1).

ambiguous_triples (list) – List of ambiguous triples.

- return_parents_dict(graph, val_matrix, include_lagzero_parents=False)[source]¶

Returns dictionary of parents sorted by val_matrix.

If parents are unclear (edgemarks with ‘o’ or ‘x’, or middle mark ‘?’), then no parent is returned.

- Parameters:

graph (array of shape [N, N, tau_max+1]) – Causal graph, see description above for interpretation.

val_matrix (array-like) – Matrix of test statistic values. Must be of shape (N, N, tau_max + 1).

include_lagzero_parents (bool (default: False)) – Whether the dictionary should also return parents at lag zero.

- Returns:

parents_dict – Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing estimated parents.

- Return type:

dict

- return_significant_links(pq_matrix, val_matrix, alpha_level=0.05, include_lagzero_links=False)[source]¶

Returns list of significant links as well as a boolean matrix.

DEPRECATED. Will be removed in future.

- run_bivci(selected_links=None, link_assumptions=None, tau_min=0, tau_max=1, val_only=False, alpha_level=0.05, fdr_method='none')[source]¶

BivCI conditional independence tests.

Implements the BivCI test (see [1]).

Returns the matrices of test statistic values, (optionally corrected) p-values, and (optionally) confidence intervals. Also (new in 4.3) returns graph based on alpha_level (and optional FDR-correction).

- Parameters:

selected_links (dict or None) – Deprecated, replaced by link_assumptions

link_assumptions (dict) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

tau_min (int, default: 0) – Minimum time lag to test. Note that zero-lags are undirected.

tau_max (int, default: 1) – Maximum time lag. Must be larger or equal to tau_min.

val_only (bool, default: False) – Option to only compute dependencies and not p-values.

alpha_level (float, optional (default: 0.05)) – Significance level at which the p_matrix is thresholded to get graph.

fdr_method (str, optional (default: 'fdr_bh')) – Correction method, currently implemented is Benjamini-Hochberg False Discovery Rate method.

- Returns:

graph (array of shape [N, N, tau_max+1]) – Causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values, optionally adjusted if fdr_method is not ‘none’.

conf_matrix (array of shape [N, N, tau_max+1,2]) – Estimated matrix of confidence intervals of test statistic values. Only computed if set in cond_ind_test, where also the percentiles are set.

- run_fullci(selected_links=None, link_assumptions=None, tau_min=0, tau_max=1, val_only=False, alpha_level=0.05, fdr_method='none')[source]¶

FullCI conditional independence tests.

Implements the FullCI test (see [1]).

Returns the matrices of test statistic values, (optionally corrected) p-values, and (optionally) confidence intervals. Also (new in 4.3) returns graph based on alpha_level (and optional FDR-correction).

- Parameters:

selected_links (dict or None) – Deprecated, replaced by link_assumptions

link_assumptions (dict) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

tau_min (int, default: 0) – Minimum time lag to test. Note that zero-lags are undirected.

tau_max (int, default: 1) – Maximum time lag. Must be larger or equal to tau_min.

val_only (bool, default: False) – Option to only compute dependencies and not p-values.

alpha_level (float, optional (default: 0.05)) – Significance level at which the p_matrix is thresholded to get graph.

fdr_method (str, optional (default: 'none')) – Correction method, currently implemented is Benjamini-Hochberg False Discovery Rate method (‘fdr_bh’).

- Returns:

graph (array of shape [N, N, tau_max+1]) – Causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values, optionally adjusted if fdr_method is not ‘none’.

conf_matrix (array of shape [N, N, tau_max+1,2]) – Estimated matrix of confidence intervals of test statistic values. Only computed if set in cond_ind_test, where also the percentiles are set.

- run_mci(selected_links=None, link_assumptions=None, tau_min=0, tau_max=1, parents=None, max_conds_py=None, max_conds_px=None, val_only=False, alpha_level=0.05, fdr_method='none')[source]¶

MCI conditional independence tests.

Implements the MCI test (Algorithm 2 in [1]).

Returns the matrices of test statistic values, (optionally corrected) p-values, and (optionally) confidence intervals. Also (new in 4.3) returns graph based on alpha_level (and optional FDR-correction).

- Parameters:

selected_links (dict or None) – Deprecated, replaced by link_assumptions

link_assumptions (dict) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

tau_min (int, default: 0) – Minimum time lag to test. Note that zero-lags are undirected.

tau_max (int, default: 1) – Maximum time lag. Must be larger or equal to tau_min.

parents (dict or None) – Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} specifying the conditions for each variable. If None is passed, no conditions are used.

max_conds_py (int or None) – Maximum number of conditions of Y to use. If None is passed, this number is unrestricted.

max_conds_px (int or None) – Maximum number of conditions of Z to use. If None is passed, this number is unrestricted.

val_only (bool, default: False) – Option to only compute dependencies and not p-values.

alpha_level (float, optional (default: 0.05)) – Significance level at which the p_matrix is thresholded to get graph.

fdr_method (str, optional (default: 'none')) – Correction method, currently implemented is Benjamini-Hochberg False Discovery Rate method (‘fdr_bh’).

- Returns:

graph (array of shape [N, N, tau_max+1]) – Causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values, optionally adjusted if fdr_method is not ‘none’.

conf_matrix (array of shape [N, N, tau_max+1,2]) – Estimated matrix of confidence intervals of test statistic values. Only computed if set in cond_ind_test, where also the percentiles are set.

- run_pc_stable(selected_links=None, link_assumptions=None, tau_min=1, tau_max=1, save_iterations=False, pc_alpha=0.2, max_conds_dim=None, max_combinations=1)[source]¶

Lagged PC algorithm for estimating lagged parents of all variables.

Parents are made available as self.all_parents

- Parameters:

selected_links (dict or None) – Deprecated, replaced by link_assumptions

link_assumptions (dict) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

tau_min (int, default: 1) – Minimum time lag to test. Useful for multi-step ahead predictions. Must be greater zero.

tau_max (int, default: 1) – Maximum time lag. Must be larger or equal to tau_min.

save_iterations (bool, default: False) – Whether to save iteration step results such as conditions used.

pc_alpha (float or list of floats, default: [0.05, 0.1, 0.2, ..., 0.5]) – Significance level in algorithm. If a list or None is passed, the pc_alpha level is optimized for every variable across the given pc_alpha values using the score computed in cond_ind_test.get_model_selection_criterion().

max_conds_dim (int or None) – Maximum number of conditions to test. If None is passed, this number is unrestricted.

max_combinations (int, default: 1) – Maximum number of combinations of conditions of current cardinality to test in PC1 step.

- Returns:

all_parents – Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing estimated parents.

- Return type:

dict

- run_pcalg(selected_links=None, link_assumptions=None, pc_alpha=0.01, tau_min=0, tau_max=1, max_conds_dim=None, max_combinations=None, lagged_parents=None, max_conds_py=None, max_conds_px=None, max_conds_px_lagged=None, mode='standard', contemp_collider_rule='majority', conflict_resolution=True)[source]¶

Runs PC algorithm for time-lagged and contemporaneous causal discovery for time series.

For

mode='contemp_conds'this implements Steps 2-4 of the PCMCIplus method described in [5]. Formode='standard'this implements the standard PC algorithm adapted to time series.[5] J. Runge, Discovering contemporaneous and lagged causal relations in autocorrelated nonlinear time series datasets http://www.auai.org/~w-auai/uai2020/proceedings/579_main_paper.pdf

- Parameters:

selected_links (dict or None) – Deprecated, replaced by link_assumptions

link_assumptions (dict) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

lagged_parents (dictionary) – Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing additional conditions for each CI test. As part of PCMCIplus these are the superset of lagged parents estimated with the PC1 algorithm.

mode ({'standard', 'contemp_conds'}) – For

mode='contemp_conds'this implements Steps 2-4 of the PCMCIplus method. Formode='standard'this implements the standard PC algorithm adapted to time series.tau_min (int, optional (default: 0)) – Minimum time lag to test.

tau_max (int, optional (default: 1)) – Maximum time lag. Must be larger or equal to tau_min.

pc_alpha (float, optional (default: 0.01)) – Significance level.

contemp_collider_rule ({'majority', 'conservative', 'none'}) – Rule for collider phase to use. See the paper for details. Only ‘majority’ and ‘conservative’ lead to an order-independent algorithm.

conflict_resolution (bool, optional (default: True)) – Whether to mark conflicts in orientation rules. Only for True this leads to an order-independent algorithm.

max_conds_dim (int, optional (default: None)) – Maximum number of conditions to test. If None is passed, this number is unrestricted.

max_combinations (int) – Maximum number of combinations of conditions of current cardinality to test. Must be infinite (default for max_combinations=1) for consistency.

max_conds_py (int, optional (default: None)) – Maximum number of lagged conditions of Y to use in MCI tests. If None is passed, this number is unrestricted.

max_conds_px (int, optional (default: None)) – Maximum number of lagged conditions of X to use in MCI tests. If None is passed, this number is unrestricted.

max_conds_px_lagged (int, optional (default: None)) – Maximum number of lagged conditions of X when X is lagged in MCI tests. If None is passed, this number is equal to max_conds_px.

- Returns:

graph (array of shape [N, N, tau_max+1]) – Resulting causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values regarding adjacencies.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values regarding adjacencies.

sepsets (dictionary) – Separating sets. See paper for details.

ambiguous_triples (list) – List of ambiguous triples, only relevant for ‘majority’ and ‘conservative’ rules, see paper for details.

- run_pcalg_non_timeseries_data(pc_alpha=0.01, max_conds_dim=None, max_combinations=None, contemp_collider_rule='majority', conflict_resolution=True)[source]¶

Runs PC algorithm for non-time series data.

Simply calls run_pcalg with tau_min = tau_max = 0. Removes lags from output dictionaries.

- Parameters:

pc_alpha (float, optional (default: 0.01)) – Significance level.

contemp_collider_rule ({'majority', 'conservative', 'none'}) – Rule for collider phase to use. See the paper for details. Only ‘majority’ and ‘conservative’ lead to an order-independent algorithm.

conflict_resolution (bool, optional (default: True)) – Whether to mark conflicts in orientation rules. Only for True this leads to an order-independent algorithm.

max_conds_dim (int, optional (default: None)) – Maximum number of conditions to test. If None is passed, this number is unrestricted.

max_combinations (int) – Maximum number of combinations of conditions of current cardinality to test. Must be infinite (default for max_combinations=1) for consistency.

- Returns:

graph (array of shape [N, N, 1]) – Resulting causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, 1]) – Estimated matrix of test statistic values regarding adjacencies.

p_matrix (array of shape [N, N, 1]) – Estimated matrix of p-values regarding adjacencies.

sepsets (dictionary) – Separating sets. See paper for details.

ambiguous_triples (list) – List of ambiguous triples, only relevant for ‘majority’ and ‘conservative’ rules, see paper for details.

- run_pcmci(selected_links=None, link_assumptions=None, tau_min=0, tau_max=1, save_iterations=False, pc_alpha=0.05, max_conds_dim=None, max_combinations=1, max_conds_py=None, max_conds_px=None, alpha_level=0.05, fdr_method='none')[source]¶

Runs PCMCI time-lagged causal discovery for time series.

Wrapper around PC-algorithm function and MCI function.

Notes

The PCMCI causal discovery method is comprehensively described in [ 1]_, where also analytical and numerical results are presented. Here we briefly summarize the method.

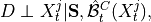

PCMCI estimates time-lagged causal links by a two-step procedure:

Condition-selection: For each variable

, estimate a

superset of parents

, estimate a

superset of parents  with the

iterative PC1 algorithm, implemented as

with the

iterative PC1 algorithm, implemented as run_pc_stable. The condition-selection step reduces the dimensionality and avoids conditioning on irrelevant variables.Momentary conditional independence (MCI)

here implemented as

run_mci. This step estimates the p-values and test statistic values for all links accounting for common drivers, indirect links, and autocorrelation.NOTE: MCI test statistic values define a particular measure of causal strength depending on the test statistic used. For example, ParCorr() results in normalized values between -1 and 1. However, if you are interested in quantifying causal effects, i.e., the effect of hypothetical interventions, you may better look at the causal effect estimation functionality of Tigramite.

PCMCI can be flexibly combined with any kind of conditional independence test statistic adapted to the kind of data (continuous or discrete) and its assumed dependency types. These are available in

tigramite.independence_tests.The main free parameters of PCMCI (in addition to free parameters of the conditional independence test statistic) are the maximum time delay

(

(tau_max) and the significance threshold in the condition-selection step (

(

pc_alpha). The maximum time delay depends on the application and should be chosen according to the maximum causal time lag expected in the complex system. We recommend a rather large choice that includes peaks in theget_lagged_dependenciesfunction. should not be seen as a significance test level in the

condition-selection step since the iterative hypothesis tests do not

allow for a precise assessment.

should not be seen as a significance test level in the

condition-selection step since the iterative hypothesis tests do not

allow for a precise assessment.  rather takes the role

of a regularization parameter in model-selection techniques. If a

list of values is given or

rather takes the role

of a regularization parameter in model-selection techniques. If a

list of values is given or pc_alpha=None, is

optimized using model selection criteria implemented in the respective

is

optimized using model selection criteria implemented in the respective

tigramite.independence_tests.Further optional parameters are discussed in [1].

Examples

>>> import numpy >>> from tigramite.pcmci import PCMCI >>> from tigramite.independence_tests import ParCorr >>> import tigramite.data_processing as pp >>> from tigramite.toymodels import structural_causal_processes as toys >>> numpy.random.seed(7) >>> # Example process to play around with >>> # Each key refers to a variable and the incoming links are supplied >>> # as a list of format [((driver, -lag), coeff), ...] >>> links_coeffs = {0: [((0, -1), 0.8)], 1: [((1, -1), 0.8), ((0, -1), 0.5)], 2: [((2, -1), 0.8), ((1, -2), -0.6)]} >>> data, _ = toys.var_process(links_coeffs, T=1000) >>> # Data must be array of shape (time, variables) >>> print (data.shape) (1000, 3) >>> dataframe = pp.DataFrame(data) >>> cond_ind_test = ParCorr() >>> pcmci = PCMCI(dataframe=dataframe, cond_ind_test=cond_ind_test) >>> results = pcmci.run_pcmci(tau_max=2, pc_alpha=None) >>> pcmci.print_significant_links(p_matrix=results['p_matrix'], val_matrix=results['val_matrix'], alpha_level=0.05) ## Significant parents at alpha = 0.05:

- Variable 0 has 1 link(s):

(0 -1): pval = 0.00000 | val = 0.588

- Variable 1 has 2 link(s):

(1 -1): pval = 0.00000 | val = 0.606 (0 -1): pval = 0.00000 | val = 0.447

- Variable 2 has 2 link(s):

(2 -1): pval = 0.00000 | val = 0.618 (1 -2): pval = 0.00000 | val = -0.499

- Parameters:

selected_links (dict or None) – Deprecated, replaced by link_assumptions

link_assumptions (dict) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

tau_min (int, optional (default: 0)) – Minimum time lag to test. Note that zero-lags are undirected.

tau_max (int, optional (default: 1)) – Maximum time lag. Must be larger or equal to tau_min.

save_iterations (bool, optional (default: False)) – Whether to save iteration step results such as conditions used.

pc_alpha (float, optional (default: 0.05)) – Significance level in algorithm.

max_conds_dim (int, optional (default: None)) – Maximum number of conditions to test. If None is passed, this number is unrestricted.

max_combinations (int, optional (default: 1)) – Maximum number of combinations of conditions of current cardinality to test in PC1 step.

max_conds_py (int, optional (default: None)) – Maximum number of conditions of Y to use. If None is passed, this number is unrestricted.

max_conds_px (int, optional (default: None)) – Maximum number of conditions of Z to use. If None is passed, this number is unrestricted.

alpha_level (float, optional (default: 0.05)) – Significance level at which the p_matrix is thresholded to get graph.

fdr_method (str, optional (default: 'fdr_bh')) – Correction method, currently implemented is Benjamini-Hochberg False Discovery Rate method.

- Returns:

graph (array of shape [N, N, tau_max+1]) – Causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values, optionally adjusted if fdr_method is not ‘none’.

conf_matrix (array of shape [N, N, tau_max+1,2]) – Estimated matrix of confidence intervals of test statistic values. Only computed if set in cond_ind_test, where also the percentiles are set.

- run_pcmciplus(selected_links=None, link_assumptions=None, tau_min=0, tau_max=1, pc_alpha=0.01, contemp_collider_rule='majority', conflict_resolution=True, reset_lagged_links=False, max_conds_dim=None, max_combinations=1, max_conds_py=None, max_conds_px=None, max_conds_px_lagged=None, fdr_method='none')[source]¶

Runs PCMCIplus time-lagged and contemporaneous causal discovery for time series.

Method described in [5]: http://www.auai.org/~w-auai/uai2020/proceedings/579_main_paper.pdf

[5] J. Runge, Discovering contemporaneous and lagged causal relations in autocorrelated nonlinear time series datasets http://www.auai.org/~w-auai/uai2020/proceedings/579_main_paper.pdf

Notes

The PCMCIplus causal discovery method is described in [5], where also analytical and numerical results are presented. In contrast to PCMCI, PCMCIplus can identify the full, lagged and contemporaneous, causal graph (up to the Markov equivalence class for contemporaneous links) under the standard assumptions of Causal Sufficiency, Faithfulness and the Markov condition.

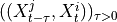

PCMCIplus estimates time-lagged and contemporaneous causal links by a four-step procedure:

1. Condition-selection (same as for PCMCI): For each variable

, estimate a superset of lagged parents

, estimate a superset of lagged parents  with the iterative PC1 algorithm,

implemented as

with the iterative PC1 algorithm,

implemented as run_pc_stable. The condition-selection step reduces the dimensionality and avoids conditioning on irrelevant variables.2. PC skeleton phase with contemporaneous conditions and Momentary conditional independence (MCI) tests: Iterate through subsets

of contemporaneous adjacencies and conduct MCI

conditional independence tests:

of contemporaneous adjacencies and conduct MCI

conditional independence tests:

here implemented as

run_pcalg. This step estimates the p-values and test statistic values for all lagged and contemporaneous adjacencies accounting for common drivers, indirect links, and autocorrelation.3. PC collider orientation phase: Orient contemporaneous collider motifs based on unshielded triples. Optionally apply conservative or majority rule (also based on MCI tests).

4. PC rule orientation phase: Orient remaining contemporaneous links based on PC rules.

In contrast to PCMCI, the relevant output of PCMCIplus is the array

graph. Its string entries are interpreted as follows:graph[i,j,tau]=-->for denotes a directed, lagged

causal link from

denotes a directed, lagged

causal link from  to

to  at lag

at lag

graph[i,j,0]=-->(andgraph[j,i,0]=<--) denotes a directed, contemporaneous causal link from to

to

graph[i,j,0]=o-o(andgraph[j,i,0]=o-o) denotes an unoriented, contemporaneous adjacency between and

and  indicating

that the collider and orientation rules could not be applied (Markov

equivalence)

indicating

that the collider and orientation rules could not be applied (Markov

equivalence)graph[i,j,0]=x-xand (graph[j,i,0]=x-x) denotes a conflicting, contemporaneous adjacency between and

and  indicating

that the directionality is undecided due to conflicting orientation

rules

indicating

that the directionality is undecided due to conflicting orientation

rules

Importantly,

p_matrixandval_matrixfor PCMCIplus quantify the uncertainty and strength, respectively, only for the adjacencies, but not for the directionality of contemporaneous links. Note that lagged links are always oriented due to time order.PCMCIplus can be flexibly combined with any kind of conditional independence test statistic adapted to the kind of data (continuous or discrete) and its assumed dependency types. These are available in

tigramite.independence_tests.The main free parameters of PCMCIplus (in addition to free parameters of the conditional independence tests) are the maximum time delay

(

(tau_max) and the significance threshold (

( pc_alpha).If a list or None is passed for

pc_alpha, the significance level is optimized for every graph across the givenpc_alphavalues using the score computed incond_ind_test.get_model_selection_criterion(). Since PCMCIplus outputs not a DAG, but an equivalence class of DAGs, first one member of this class is computed and then the score is computed as the average over all models fits for each variable in[0, ..., N]for that member. The score is the same for all members of the class.The maximum time delay depends on the application and should be chosen according to the maximum causal time lag expected in the complex system. We recommend a rather large choice that includes peaks in the

get_lagged_dependenciesfunction. Another important parameter iscontemp_collider_rule. Only if set tomajorityorconservative'' and together with ``conflict_resolution=True, PCMCIplus is fully order independent meaning that the order of the N variables in the dataframe does not matter. Last, the default optionreset_lagged_links=Falserestricts the detection of lagged causal links in Step 2 to the significant adjacencies found in Step 1, given by . For

. For

reset_lagged_links=True, all lagged links are considered again, which improves detection power for lagged links, but also leads to larger runtimes.Further optional parameters are discussed in [5].

- Parameters:

selected_links (dict or None) – Deprecated, replaced by link_assumptions

link_assumptions (dict) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

tau_min (int, optional (default: 0)) – Minimum time lag to test.

tau_max (int, optional (default: 1)) – Maximum time lag. Must be larger or equal to tau_min.

pc_alpha (float or list of floats, default: 0.01) – Significance level in algorithm. If a list or None is passed, the pc_alpha level is optimized for every graph across the given pc_alpha values ([0.001, 0.005, 0.01, 0.025, 0.05] for None) using the score computed in cond_ind_test.get_model_selection_criterion().

contemp_collider_rule ({'majority', 'conservative', 'none'}) – Rule for collider phase to use. See the paper for details. Only ‘majority’ and ‘conservative’ lead to an order-independent algorithm.

conflict_resolution (bool, optional (default: True)) – Whether to mark conflicts in orientation rules. Only for True this leads to an order-independent algorithm.

reset_lagged_links (bool, optional (default: False)) – Restricts the detection of lagged causal links in Step 2 to the significant adjacencies found in the PC1 algorithm in Step 1. For True, all lagged links are considered again, which improves detection power for lagged links, but also leads to larger runtimes.

max_conds_dim (int, optional (default: None)) – Maximum number of conditions to test. If None is passed, this number is unrestricted.

max_combinations (int, optional (default: 1)) – Maximum number of combinations of conditions of current cardinality to test in PC1 step.

max_conds_py (int, optional (default: None)) – Maximum number of lagged conditions of Y to use in MCI tests. If None is passed, this number is unrestricted.

max_conds_px (int, optional (default: None)) – Maximum number of lagged conditions of X to use in MCI tests. If None is passed, this number is unrestricted.

max_conds_px_lagged (int, optional (default: None)) – Maximum number of lagged conditions of X when X is lagged in MCI tests. If None is passed, this number is equal to max_conds_px.

fdr_method (str, optional (default: 'none')) – Correction method, default is Benjamini-Hochberg False Discovery Rate method.

- Returns:

graph (array of shape [N, N, tau_max+1]) – Resulting causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values regarding adjacencies.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values regarding adjacencies.

sepsets (dictionary) – Separating sets. See paper for details.

ambiguous_triples (list) – List of ambiguous triples, only relevant for ‘majority’ and ‘conservative’ rules, see paper for details.

tigramite.lpcmci: LPCMCI¶

- class tigramite.lpcmci.LPCMCI(dataframe, cond_ind_test, verbosity=0)[source]¶

LPCMCI is an algorithm for causal discovery in large-scale times series that allows for latent confounders and learns lag-specific causal relationships. The algorithm is introduced and explained in:

[1] Gerhardus, A. & Runge, J. High-recall causal discovery for autocorrelated time series with latent confounders. Advances in Neural Information Processing Systems, 2020, 33. https://proceedings.neurips.cc/paper/2020/hash/94e70705efae423efda1088614128d0b-Abstract.html

NOTE: This method is still EXPERIMENTAL since the default settings of hyperparameters are still being fine-tuned. We actually invite feedback on which work best in applications and numerical experiments. The main function, which applies the algorithm, is ‘run_lpcmci’.

Parameters passed to the constructor:

dataframe: Tigramite dataframe object that contains the the time series dataset bold{X}

cond_ind_test: A conditional independence test object that specifies which conditional independence test CI is to be used

verbosity: Controls the verbose output self.run_lpcmci() and the function it calls.

Parameters passed to self.run_lpcmci(): Note: The default values are still being tuned and some parameters might be removed in the future.

- link_assumptions: dict or None

Two-level nested dictionary such that link_assumptions[j][(i, lag_i)], where 0 <= j, i <= N-1 (with N the number of component time series) and -tau_max <= lag_i <= -tau_min, is a string which specifies background knowledge about the link from X^i_{t+lag_i} to X^j_t. These are the possibilities for this string and the corresponding claim:

‘-?>’ : X^i_{t+lag_i} is an ancestor of X^j_t. ‘–>’ : X^i_{t+lag_i} is an ancestor of X^j_t, and there is a link between X^i_{t+lag_i} and X^j_t ‘<?-’ : Only allowed for lag_i = 0. X^j_t is an ancestor of X^i_t. ‘<–’ : Only allowed for lag_i = 0. X^j_t is an ancestor of X^i_t, and there is a link between X^i_t and X^j_t ‘<?>’ : Neither X^i_{t+lag_i} is an ancestor of X^j_t nor the other way around ‘<->’ : Neither X^i_{t+lag_i} is an ancestor of X^j_t nor the other way around, and there is a link between X^i_{t+lag_i} and X^j_t ‘o?>’ : X^j_t is not an ancestor of X^i_{t+lag_i} (for lag_i < 0 this background knowledge is (for the default settings of self.run_lpcmci()) imposed automatically) ‘o->’ : X^j_t is not an ancestor of X^i_{t+lag_i}, and there is a link between X^i_{t+lag_i} and X^j_t ‘<?o’ : Only allowed for lag_i = 0. X^i_t is not an ancestor of X^j_t ‘<-o’ : Only allowed for lag_i = 0. X^i_t is not an ancestor of X^j_t, and there is a link between X^i_t and X^j_t ‘o-o’ : Only allowed for lag_i = 0. There is a link between X^i_t and X^j_t ‘o?o’ : Only allowed for lag_i = 0. No claim is made ‘’ : There is no link between X^i_{t+lag_i} and X^j_t.

Another way to specify the absent link is if the form of the link between (i, lag_i) and (j, 0) is not specified by the dictionary, that is, if either link_assumptions[j] does not exist or link_assumptions[j] does exist but link_assumptions[j][(i, lag_i)] does not exist, then the link between (i, lag_i) and (j, 0) is assumed to be absent.

tau_min: The assumed minimum time lag, i.e., links with a lag smaller than tau_min are assumed to be absent.

tau_max: The maximum considered time lag, i.e., the algorithm learns a DPAG on a time window [t-taumax, t] with tau_max + 1 time steps. It is not assumed that in the underlying time series DAG there are no links with a lag larger than tau_max.

pc_alpha: The significance level of conditional independence tests

n_preliminary_iterations: Determines the number of iterations in the preliminary phase of LPCMCI, corresponding to the ‘k’ in LPCMCI(k) in [1].

max_cond_px: Consider a pair of variables (X^i_{t-tau}, X^j_t) with tau > 0. In Algorithm S2 in [1] (here this is self._run_ancestral_removal_phase()), the algorithm does not test for conditional independence given subsets of apds_t(X^i_{t-tau}, X^j_t, C (G)) of cardinality higher than max_cond_px. In Algorithm S3 in [1] (here this is self._run_non_ancestral_removal_phase()), the algorithm does not test for conditional independence given subsets of napds_t (X^i_{t-tau}, X^j_t, C(G)) of cardinality higher than max_cond_px.

max_p_global: Restricts all conditional independence tests to conditioning sets with cardinality smaller or equal to max_p_global

max_p_non_ancestral: Restricts all conditional independence tests in the second removal phase (here this is self._run_dsep_removal_phase()) to conditioning sets with cardinality smaller or equal to max_p_global

max_q_global: For each ordered pair (X^i_{t-tau}, X^j_t) of adjacent variables and for each cardinality of the conditioning sets test at most max_q_global many conditioning sets (when summing over all tested cardinalities more than max_q_global tests may be made)

max_pds_set: In Algorithm S3 (here this is self._run_non_ancestral_removal_phase()), the algorithm tests for conditional independence given subsets of the relevant napds_t sets. If for a given link the set napds_t(X^j_t, X^i_{t-tau}, C(G)) has more than max_pds_set many elements (or, if the link is also tested in the opposite directed, if napds_t(X^i_{t-tau}, X^j_t, C(G)) has more than max_pds_set elements), this link is not tested.

prelim_with_collider_rules: If True: As in pseudocode If False: Line 22 of Algorithm S2 in [1] is replaced by line 18 of Algorithm S2 when Algorithm S2 is called from the preliminary phase (not in the last application of Algorithm S2 directly before Algorithm S3 is applied)

parents_of_lagged: If True: As in pseudocode If False: The default conditioning set is pa(X^j_t, C(G)) rather than pa({X^j_t, X^i_ {t-tau}, C(G)) for tau > 0

prelim_only: If True, stop after the preliminary phase. Can be used for detailed performance analysis

break_once_separated: If True: As in pseudocode If False: The break commands are removed from Algorithms S2 and S3 in in [1]

no_non_ancestral_phase: If True, do not execute Algorithm S3. Can be used for detailed performance analysis

use_a_pds_t_for_majority: If True: As in pseudocode If False: The search for separating sets instructed by the majority rule is made given subsets adj(X^j_t, C(G)) rather than subsets of apds_t(X^j_t, X^i_ {t-tau}, C(G))

- orient_contemp:

If orient_contemp == 1: As in pseudocode of Algorithm S2 in [1] If orient_contemp == 2: Also orient contemporaneous links in line 18 of Algorithm S2 If orient_comtemp == 0: Also not orient contemporaneous links in line 22 of Algorithm S2

- update_middle_marks:

If True: As in pseudoce of Algorithms S2 and S3 in [1] If False: The MMR rule is not applied

- prelim_rules:

If prelim_rules == 1: As in pseudocode of Algorithm S2 in [1] If prelim_rules == 0: Exclude rules R9^prime and R10^prime from line 18 in Algorithm S2

fix_all_edges_before_final_orientation: When one of max_p_global, max_p_non_ancestral, max_q_global or max_pds_set is not np.inf, the algorithm may terminate although not all middle marks are empty. All orientation rules are nevertheless sound, since the rules always check for the appropriate middle marks. If fix_all_edges_before_final_orientation is True, all middle marks are set to the empty middle mark by force, followed by another application of the rules.

auto_first: If True: As in pseudcode of Algorithms S2 and S3 in [1] If False: Autodependency links are not prioritized even before contemporaneous links

- remember_only_parents:

If True: As in pseudocode of Algorithm 1 If False: If X^i_{t-tau} has been marked as ancestor of X^j_t at any point of a preliminary iteration but the link between X^i_{t-tau} and X^j_t was removed later, the link is nevertheless initialized with a tail at X^i_{t-tau} in the re-initialization

- no_apr:

If no_apr == 0: As in pseudcode of Algorithms S2 and S3 in [1] If no_apr == 1: The APR is not applied by Algorithm S2, except in line 22 of its last call directly before the call of Algorithm S3 If no_apr == 2: The APR is never applied

- Return value of self.run_lpcmci():

- grapharray of shape (N, N, tau_max+1)

Resulting DPAG, representing the learned causal relationships.

- val_matrixarray of shape (N, N, tau_max+1)

Estimated matrix of test statistic values regarding adjacencies.

- p_matrixarray of shape [N, N, tau_max+1]

Estimated matrix of p-values regarding adjacencies.

A note on middle marks: For convenience (to have strings of the same lengths) we here internally denote the empty middle mark by ‘-’. For post-processing purposes all middle marks are set to the empty middle mark (here ‘-‘).

A note on wildcards: The middle mark wildcard ast and the edge mark wildcard are here represented as ‘*’’, the edge mark wildcard star as ‘+’.

- run_lpcmci(link_assumptions=None, tau_min=0, tau_max=1, pc_alpha=0.05, n_preliminary_iterations=1, max_cond_px=0, max_p_global=inf, max_p_non_ancestral=inf, max_q_global=inf, max_pds_set=inf, prelim_with_collider_rules=True, parents_of_lagged=True, prelim_only=False, break_once_separated=True, no_non_ancestral_phase=False, use_a_pds_t_for_majority=True, orient_contemp=1, update_middle_marks=True, prelim_rules=1, fix_all_edges_before_final_orientation=True, auto_first=True, remember_only_parents=True, no_apr=0)[source]¶

Run LPCMCI on the dataset and with the conditional independence test passed to the class constructor and with the options passed to this function.

tigramite.rpcmci: RPCMCI¶

- class tigramite.rpcmci.RPCMCI(dataframe, cond_ind_test=None, prediction_model=None, seed=None, verbosity=- 1)[source]¶

RPCMCI class for extracting causal regimes and the associated graphs from time series data.

Notes

The Regime-PCMCI causal discovery method is described in:

Elena Saggioro, Jana de Wiljes, Marlene Kretschmer, Jakob Runge; Reconstructing regime-dependent causal relationships from observational time series. Chaos 1 November 2020; 30 (11): 113115. https://doi.org/10.1063/5.0020538

The method iterates between two phases –a regime learning phase (optimization-based) and a causal discovery phase (PCMCI)– to identify regime dependent causal relationships. A persistent discrete regime variable is assumed that leads to a finite number of regimes within which stationarity can be assumed.

- Parameters:

dataframe (data object) – This is the Tigramite dataframe object. It has the attributes dataframe.values yielding a numpy array of shape ( observations T, variables N). For RPCMCI the mask will be ignored. You may use the missing_flag to indicate missing values.

cond_ind_test (conditional independence test object) – This can be ParCorr or other classes from

tigramite.independence_testsor an external test passed as a callable. This test can be based on the class tigramite.independence_tests.CondIndTest.prediction_model (sklearn model object) – For example, sklearn.linear_model.LinearRegression() for a linear regression model. This should be consistent with cond_ind_test, ie, use ParCorr() with a linear model and, eg, GPDC() with a GaussianProcessRegressor model, or CMIknn with NearestNeighbors model.

seed (int) – Random seed for annealing step.

verbosity (int, optional (default: -1)) – Verbose levels -1, 0, 1, …

- run_rpcmci(num_regimes, max_transitions, switch_thres=0.05, num_iterations=20, max_anneal=10, tau_min=1, tau_max=1, pc_alpha=0.2, alpha_level=0.01, n_jobs=- 1)[source]¶

- Run RPCMCI method for extracting causal regimes and the associated graphs from

time series data.

- Parameters:

num_regimes (int) – Number of assumed regimes.

max_transitions (int) – Maximum number of transitions within a single regime (persistency parameter).

switch_thres (float) – Switch threshold.

num_iterations (int) – Optimization iterations.

max_anneal (int) – Maximum annealing runs.

tau_min (int, optional (default: 0)) – Minimum time lag to test.

tau_max (int, optional (default: 1)) – Maximum time lag. Must be larger or equal to tau_min.

pc_alpha (float, optional (default: 0.2)) – Significance level in PCMCI.

alpha_level (float, optional (default: 0.05)) – Significance level in PCMCI at which the p_matrix is thresholded to get graph.

n_jobs (int, optional (default: -1)) – Number of CPUs to use in joblib parallization. Default n_jobs=-1 uses all available.

- Returns:

regimes (array of shape (n_regimes, T)) – One-hot encoded regime variable.

causal_results (dictionary) – Contains result of run_pcmci() after convergence.

diff_g_f (tuple) – Difference between two consecutive optimizations for all annealings and the optimal one with minimum objective value (see paper).

error_free_annealings (int) – Number of annealings that converged without error.

tigramite.jpcmciplus: JPCMCIplus¶

- class tigramite.jpcmciplus.JPCMCIplus(node_classification, **kwargs)[source]¶

J-PCMCIplus causal discovery for time series datasets from multiple contexts.

This class is based on the PCMCI framework as described in [1]. JPCMCIplus enables causal discovery for time series data from different contexts, i.e. datasets, where some of the variables describing the context might be unobserved. The method is described in detail in [10]. See the tutorial for guidance in applying the method.

References

- Parameters:

node_classification (dictionary) – Classification of nodes into system, context, or dummy nodes. Keys of the dictionary are from {0, …, N-1} where N is the number of nodes. Options for the values are “system”, “time_context”, “space_context”, “time_dummy”, or “space_dummy”.

- all_parents¶

Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing the conditioning-parents estimated with PC algorithm.

- Type:

dictionary

- val_min¶

Dictionary of form val_min[j][(i, -tau)] = float containing the minimum test statistic value for each link estimated in the PC algorithm.

- Type:

dictionary

- pval_max¶

Dictionary of form pval_max[j][(i, -tau)] = float containing the maximum p-value for each link estimated in the PC algorithm.

- Type:

dictionary

- iterations¶

Dictionary containing further information on algorithm steps.

- Type:

dictionary

- N¶

Number of variables.

- Type:

int

- T¶

Time series sample length of dataset(s).

- Type:

dict

- dummy_parents¶

Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing the dependence of the system nodes on the dummy nodes.

- Type:

dictionary or None

- observed_context_parents¶

Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing the dependence of the system nodes on the observed context nodes.

- Type:

dictionary or None

- dummy_ci_test¶

Conditional independence test used to test dependence between system nodes and dummy nodes. Currently, ParCorr is used with one-hot encoded dummies.

- Type:

conditional independence test object

- mode¶

- Type:

“system_search” or “context_search” or “dummy_search” (default: “system_search”)

- time_context_nodes¶

List with entries from {0, …, N-1} where N is the number of nodes. This is the list of the temporal context nodes which are assumed to be constant over the different datasets.

- Type:

list

- space_context_nodes¶

List with entries from {0, …, N-1} where N is the number of nodes. This is the list of the spatial context nodes which are assumed to be constant over time.

- time_dummy¶

Node corresponding to the temporal dummy variable.

- Type:

int or None (default: None)

- space_dummy¶

Node corresponding to the spatial dummy variable.

- Type:

int or None (default: None)

- system_nodes¶

List with entries from {0, …, N-1} where N is the number of nodes. This is the list of the system nodes.

- Type:

list

- add_found_context_link_assumptions(link_assumptions, tau_max)[source]¶

Helper function to add discovered links between system and observed context nodes to link_assumptions.

- assume_exogenous_context(link_assumptions, observed_context_nodes)[source]¶

Helper function to amend the link_assumptions to ensure that all context-system links are oriented such that the context variable is the parent.

- clean_link_assumptions(link_assumptions, tau_max)[source]¶

Helper function to amend the link_assumptions in the following ways * remove any links where dummy is the child * remove any lagged links to dummy, and space_context (not to observed time context) * and system - context links where context is the child * and any links between spatial and temporal context

- clean_system_link_assumptions(link_assumptions, tau_max)[source]¶

Helper function to remove any links to dummy and observed context nodes from link_assumptions. Add discovered links to contextual parents (from steps 1 and 2) to the link_assumptions.

- discover_dummy_system_links(link_assumptions, context_system_results, lagged_parents, tau_min=0, tau_max=1, pc_alpha=0.01, reset_lagged_links=False, max_conds_dim=None, max_conds_py=None, max_conds_px=None, max_conds_px_lagged=None, fdr_method='none')[source]¶

Step 2 of JPCMCIplus, i.e. discovery of links between observed (time and space) dummy nodes and system nodes through an application of the skeleton phase of PCMCIplus to this subset of nodes (dummy nodes and system nodes). See run_jpcmciplus for a description of the parameters.

- Parameters:

context_system_results (dictionary) – Output of discover_lagged_and_context_system_links, i.e. lagged and context parents together with the corresponding estimated test statistic values regarding adjacencies.

lagged_parents (dictionary) – Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing the conditioning-parents estimated with PC algorithm.

- Returns:

graph (array of shape [N, N, tau_max+1]) – Resulting causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values regarding adjacencies.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values regarding adjacencies.

parents (dictionary) – Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing the estimated dummy parents of the system nodes.

- discover_lagged_context_system_links(link_assumptions, tau_min=0, tau_max=1, pc_alpha=0.01, reset_lagged_links=False, max_conds_dim=None, max_combinations=1, max_conds_py=None, max_conds_px=None, max_conds_px_lagged=None, fdr_method='none')[source]¶

Step 1 of JPCMCIplus, i.e. discovery of links between observed context nodes and system nodes through an application of the skeleton phase of PCMCIplus to this subset of nodes (observed context nodes and system nodes). See run_jpcmciplus for a description of the parameters.

- Returns:

graph (array of shape [N, N, tau_max+1]) – Resulting causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values regarding adjacencies.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values regarding adjacencies.

parents (dictionary) – Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing the estimated context parents of the system nodes.

lagged_parents (dictionary) – Dictionary of form {0:[(0, -1), (3, -2), …], 1:[], …} containing the conditioning-parents estimated with PC algorithm.

- discover_system_system_links(link_assumptions, lagged_context_dummy_parents, tau_min=0, tau_max=1, pc_alpha=0.01, reset_lagged_links=False, max_conds_dim=None, max_conds_py=None, max_conds_px=None, max_conds_px_lagged=None, fdr_method='none')[source]¶

Step 4 of JPCMCIplus and orientation phase, i.e. discovery of links between system nodes given the knowledge about their context parents through an application of PCMCIplus to this subset of nodes (system nodes). See run_jpcmciplus for a description of the other parameters.

- Parameters:

lagged_context_dummy_parents (dictionary) – Dictionary containing lagged and (dummy and observed) context parents of the system nodes estimated during step 1 and step 2 of J-PCMCI+.

- Returns:

graph (array of shape [N, N, tau_max+1]) – Resulting causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values regarding adjacencies.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values regarding adjacencies.

sepset (dictionary) – Separating sets. See paper for details.

ambiguous_triples (list) – List of ambiguous triples, only relevant for ‘majority’ and ‘conservative’ rules, see paper for details.

- remove_dummy_link_assumptions(link_assumptions)[source]¶

Helper function to remove any links to dummy from link_assumptions.

- run_jpcmciplus(contemp_collider_rule='majority', link_assumptions=None, tau_min=0, tau_max=2, pc_alpha=0.01, conflict_resolution=True, reset_lagged_links=False, max_conds_dim=None, max_combinations=1, max_conds_py=None, max_conds_px=None, max_conds_px_lagged=None, fdr_method='none')[source]¶

Runs JPCMCIplus time-lagged and contemporaneous causal discovery for time series from multiple contexts. Method described in [10]:

W. Günther, U. Ninad, J. Runge, Causal discovery for time series from multiple datasets with latent contexts. UAI 2023

Notes

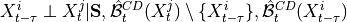

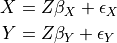

The JPCMCIplus causal discovery method is described in [10], where also analytical and numerical results are presented. JPCMCIplus can identify the joint causal graph over multiple datasets containing time series data from different contexts under the standard assumptions of Causal Sufficiency, Faithfulness and the Markov condition, as well as some background knowledge assumptions. JPCMCIplus estimates time-lagged and contemporaneous causal links from context to system variables and in between system variables by a four-step procedure:

1. Discovery of supersets of the lagged parents of the system and observed temporal context nodes by running the

lagged phase on this subset of nodes to obtain

lagged phase on this subset of nodes to obtain  .

.2. Next, the MCI test is run on pairs of system and context nodes conditional on subsets of system and context, i.e. perform MCI tests for pairs

,

,

,

,  for all

for all  ,

,

with

being a subset of the contemporaneous adjacencies

being a subset of the contemporaneous adjacencies  and

and

are the lagged adjacencies from step one. If

are the lagged adjacencies from step one. If  is a

spatial context variable, we only have to test the contemporaneous pairs

is a

spatial context variable, we only have to test the contemporaneous pairs

,

,  for all

for all  .

If

.

If  and

and  are conditionally independent, all lagged links between

are conditionally independent, all lagged links between  and

and

are also removed for all

are also removed for all  .

.3. Perform MCI tests on all system-dummy pairs conditional on the superset of lagged links, the discovered contemporaneous context adjacencies, as well as on subsets of contemporaneous system links, i.e. test for

,

,  for all

for all  , i.e.

, i.e.

where

and

and  are the lagged and contextual adjacencies found in the previous step.

If

are the lagged and contextual adjacencies found in the previous step.

If  and

and  are found to be conditionally independence, links between

are found to be conditionally independence, links between  and

and

are removed for all

are removed for all  .

By assumption context node is the parent in all system-context links.

.

By assumption context node is the parent in all system-context links.4. Finally, we perform MCI tests on all system pairs conditional on discovered lagged, context and dummy adjacencies, as well as on subsets of contemporaneous system links and orientation phase. In more detail, we perform MCI test for pairs

,

,  for all

for all

, i.e.

, i.e.

where

and

and  are the lagged, contextual, and dummy adjacencies found in the previous steps.

Finally, all remaining edges (without expert knowledge) are oriented using the PCMCI+ orientation phase while

making use of all triples involving one context or dummy variable and two system variables as in the non-time

series case.

are the lagged, contextual, and dummy adjacencies found in the previous steps.

Finally, all remaining edges (without expert knowledge) are oriented using the PCMCI+ orientation phase while

making use of all triples involving one context or dummy variable and two system variables as in the non-time

series case.JPCMCIplus can be flexibly combined with any kind of conditional independence test statistic adapted to the kind of data (continuous or discrete) and its assumed dependency types. These are available in

tigramite.independence_tests. See PCMCIplus for a description of the parameters of JPCMCIplus. Also, guidance on best practices for setting these parameters is given there.- Parameters:

link_assumptions (dict) – Dictionary of form {j:{(i, -tau): link_type, …}, …} specifying assumptions about links. This initializes the graph with entries graph[i,j,tau] = link_type. For example, graph[i,j,0] = ‘–>’ implies that a directed link from i to j at lag 0 must exist. Valid link types are ‘o-o’, ‘–>’, ‘<–’. In addition, the middle mark can be ‘?’ instead of ‘-’. Then ‘-?>’ implies that this link may not exist, but if it exists, its orientation is ‘–>’. Link assumptions need to be consistent, i.e., graph[i,j,0] = ‘–>’ requires graph[j,i,0] = ‘<–’ and acyclicity must hold. If a link does not appear in the dictionary, it is assumed absent. That is, if link_assumptions is not None, then all links have to be specified or the links are assumed absent.

tau_min (int, optional (default: 0)) – Minimum time lag to test.

tau_max (int, optional (default: 1)) – Maximum time lag. Must be larger or equal to tau_min.

pc_alpha (float or list of floats, default: 0.01) – Significance level in algorithm. If a list or None is passed, the pc_alpha level is optimized for every graph across the given pc_alpha values ([0.001, 0.005, 0.01, 0.025, 0.05] for None) using the score computed in cond_ind_test.get_model_selection_criterion().

contemp_collider_rule ({'majority', 'conservative', 'none'}) – Rule for collider phase to use. See the paper for details. Only ‘majority’ and ‘conservative’ lead to an order-independent algorithm.

conflict_resolution (bool, optional (default: True)) – Whether to mark conflicts in orientation rules. Only for True this leads to an order-independent algorithm.

reset_lagged_links (bool, optional (default: False)) – Restricts the detection of lagged causal links in Step 2 to the significant adjacencies found in the PC1 algorithm in Step 1. For True, all lagged links are considered again, which improves detection power for lagged links, but also leads to larger runtimes.

max_conds_dim (int, optional (default: None)) – Maximum number of conditions to test. If None is passed, this number is unrestricted.

max_combinations (int, optional (default: 1)) – Maximum number of combinations of conditions of current cardinality to test. Defaults to 1 for PC_1 algorithm. For original PC algorithm a larger number, such as 10, can be used.

max_conds_py (int, optional (default: None)) – Maximum number of lagged conditions of Y to use in MCI tests. If None is passed, this number is unrestricted.

max_conds_px (int, optional (default: None)) – Maximum number of lagged conditions of X to use in MCI tests. If None is passed, this number is unrestricted.

max_conds_px_lagged (int, optional (default: None)) – Maximum number of lagged conditions of X when X is lagged in MCI tests. If None is passed, this number is equal to max_conds_px.

fdr_method (str, optional (default: 'none')) – Correction method, default is Benjamini-Hochberg False Discovery Rate method.

- Returns:

graph (array of shape [N, N, tau_max+1]) – Resulting causal graph, see description above for interpretation.

val_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of test statistic values regarding adjacencies.

p_matrix (array of shape [N, N, tau_max+1]) – Estimated matrix of p-values regarding adjacencies.

sepset (dictionary) – Separating sets. See paper for details.

ambiguous_triples (list) – List of ambiguous triples, only relevant for ‘majority’ and ‘conservative’ rules, see paper for details.

tigramite.independence_tests: Conditional independence tests¶

Base class:

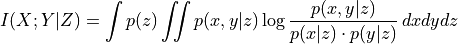

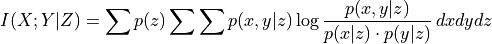

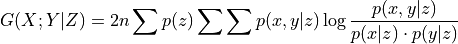

- class tigramite.independence_tests.independence_tests_base.CondIndTest(seed=42, mask_type=None, significance='analytic', fixed_thres=None, sig_samples=500, sig_blocklength=None, confidence=None, conf_lev=0.9, conf_samples=100, conf_blocklength=None, recycle_residuals=False, verbosity=0)[source]¶

Base class of conditional independence tests.

Provides useful general functions for different independence tests such as shuffle significance testing and bootstrap confidence estimation. Also handles masked samples. Other test classes can inherit from this class.

- Parameters:

seed (int, optional(default = 42)) – Seed for RandomState (default_rng)

mask_type (str, optional (default = None)) – Must be in {None, ‘y’,’x’,’z’,’xy’,’xz’,’yz’,’xyz’} Masking mode: Indicators for which variables in the dependence measure I(X; Y | Z) the samples should be masked. If None, the mask is not used. Explained in tutorial on masking and missing values.

significance (str, optional (default: 'analytic')) – Type of significance test to use. In this package ‘analytic’, ‘fixed_thres’ and ‘shuffle_test’ are available.

fixed_thres (float, optional (default: 0.1)) – Deprecated.

sig_samples (int, optional (default: 500)) – Number of samples for shuffle significance test.

sig_blocklength (int, optional (default: None)) – Block length for block-shuffle significance test. If None, the block length is determined from the decay of the autocovariance as explained in [1].

confidence (str, optional (default: None)) – Specify type of confidence estimation. If False, numpy.nan is returned. ‘bootstrap’ can be used with any test, for ParCorr also ‘analytic’ is implemented.

conf_lev (float, optional (default: 0.9)) – Two-sided confidence interval.

conf_samples (int, optional (default: 100)) – Number of samples for bootstrap.

conf_blocklength (int, optional (default: None)) – Block length for block-bootstrap. If None, the block length is determined from the decay of the autocovariance as explained in [1].

recycle_residuals (bool, optional (default: False)) – Specifies whether residuals should be stored. This may be faster, but can cost considerable memory.

verbosity (int, optional (default: 0)) – Level of verbosity.

- get_analytic_confidence(value, df, conf_lev)[source]¶

Base class assumption that this is not implemented. Concrete classes should override when possible.

- get_analytic_significance(value, T, dim)[source]¶

Base class assumption that this is not implemented. Concrete classes should override when possible.

- get_bootstrap_confidence(array, xyz, dependence_measure=None, conf_samples=100, conf_blocklength=None, conf_lev=0.95, data_type=None, verbosity=0)[source]¶

Perform bootstrap confidence interval estimation.

With conf_blocklength > 1 or None a block-bootstrap is performed.

- arrayarray-like

data array with X, Y, Z in rows and observations in columns

- xyzarray of ints

XYZ identifier array of shape (dim,).

- dependence_measurefunction (default = self.get_dependence_measure)